Lecture 4: Conditional Probability | Statistics 110

Harvard University

The lecture finishes the De Montmort matching problem, then covers independence, the Newton-Pepys dice problem, conditional probability, and Bayes' rule. Recurring themes are how to update probabilities in light of evidence and why a correct probability calculation should be backed by clear intuition.

Insights

- Symmetry in the De Montmort matching problem makes every term within each inclusion-exclusion sum equal, so each sum collapses to a simple factorial expression and the probability of no match approaches 1/e.

- The Newton-Pepys problem shows that a correct calculation can rest on flawed intuition: Newton's answer was right, but his intuitive argument ignored whether the dice were fair. Conditional probability, the main topic of the lecture, is the basic tool for updating beliefs based on new evidence, which is central to scientific analysis and decision-making.

Get key ideas from YouTube videos. It’s free

Recent questions

What is the De Montmort matching problem?

The De Montmort matching problem involves flipping cards from a deck labeled 1 to n, aiming to match the card number with its label.

Related videos

Harvard University

Lecture 5: Conditioning Continued, Law of Total Probability | Statistics 110

The GCSE Maths Tutor

All of Probability in 30 Minutes!! Foundation & Higher Grades 4-9 Maths Revision | GCSE Maths Tutor

Harvard University

Lecture 7: Gambler's Ruin and Random Variables | Statistics 110

The Organic Chemistry Tutor

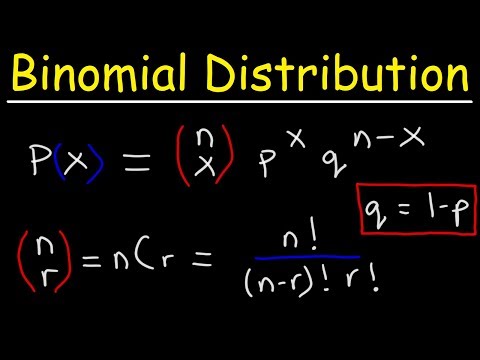

Finding The Probability of a Binomial Distribution Plus Mean & Standard Deviation

Gresham College

Lottery-Winning Maths

Summary

00:00

De Montmort Problem: Probability and Independence

- The lecture opens by finishing the De Montmort matching problem with inclusion-exclusion, then introduces independence.

- The main topic of the day is conditional probability, that is, conditioning.

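- In the De Montmort problem, a deck of n cards labeled 1 to n is shuffled, and the cards are flipped over one at a time while counting from 1 to n. You win if, at some point, the number you say matches the label on the card (card j appears in position j).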

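- Let A_j be the event that card j is in position j. The probability of winning is P(A_1 ∪ A_2 ∪ ... ∪ A_n), computed with inclusion-exclusion: add the probabilities of the single events, subtract those of all pairwise intersections, add those of all triple intersections, and so on.

- Symmetry simplifies the calculation: P(A_j) = 1/n for every j, and any intersection of k of the events has probability (n-k)!/n!. The k-th inclusion-exclusion sum therefore has C(n, k) equal terms, and C(n, k)·(n-k)!/n! = 1/k!, so P(win) = 1 - 1/2! + 1/3! - ... + (-1)^(n+1)/n!.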

- The probability of no match is 1 minus the probability of at least one match: P(no match) = 1 - 1 + 1/2! - 1/3! + ... + (-1)^n/n!.

- This is a partial sum of the Taylor series e^x = 1 + x + x^2/2! + x^3/3! + ..., evaluated at x = -1, so for large n, P(no match) ≈ 1/e ≈ 0.37 and P(win) ≈ 1 - 1/e ≈ 0.63.

- Independence is introduced: events A and B are independent if P(A ∩ B) = P(A)P(B). Intuitively, learning that one of them occurred gives no information about whether the other occurred.

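- Independence is completely different from disjointness. If A and B are disjoint and both have positive probability, then P(A ∩ B) = 0 < P(A)P(B), so they are not independent: learning that A occurred tells you that B did not.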

- Three events A, B, C are independent if they are pairwise independent (P(A ∩ B) = P(A)P(B), P(A ∩ C) = P(A)P(C), P(B ∩ C) = P(B)P(C)) and also satisfy P(A ∩ B ∩ C) = P(A)P(B)P(C).
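The inclusion-exclusion result can be checked numerically. The sketch below (an illustration, not code from the lecture) evaluates the exact formula P(no match) = 1 - 1 + 1/2! - ... + (-1)^n/n!, compares it with a brute-force count over all n! orderings for small n, and prints it next to 1/e. The function names are hypothetical.

```python
import math
from fractions import Fraction
from itertools import permutations

def p_no_match_formula(n: int) -> Fraction:
    """Exact P(no card lands in its own position): sum_{k=0}^{n} (-1)^k / k!."""
    return sum(Fraction((-1) ** k, math.factorial(k)) for k in range(n + 1))

def p_no_match_brute_force(n: int) -> Fraction:
    """Fraction of the n! equally likely orderings with no card in its own position."""
    perms = list(permutations(range(n)))
    no_match = sum(1 for perm in perms if all(perm[i] != i for i in range(n)))
    return Fraction(no_match, len(perms))

# The inclusion-exclusion formula agrees with direct counting for small decks.
for n in range(1, 8):
    assert p_no_match_formula(n) == p_no_match_brute_force(n)

# For larger n, P(no match) approaches 1/e and P(win) approaches 1 - 1/e.
for n in (5, 10, 20):
    p = float(p_no_match_formula(n))
    print(f"n = {n}: P(no match) = {p:.6f}, P(win) = {1 - p:.6f}")
print(f"1/e = {1 / math.e:.6f}")
```

Because the series alternates with decreasing terms, the gap between P(no match) and 1/e is at most 1/(n+1)!, the first omitted term, which explains why the approximation is already excellent for a modest deck.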

14:35

Probability of Independent Events: Multiplication Method

- To find the probability that independent events all occur, multiply their individual probabilities.

- Pairwise independence does not imply independence: three events can be pairwise independent and still fail P(A ∩ B ∩ C) = P(A)P(B)P(C).

- Constructing counterexamples is a useful practice when dealing with definitions and concepts.

- Independence of three events requires all four equations to hold at once; neither the three pairwise equations nor the triple equation implies the others.

- For n events to be independent, every subcollection of them (every pair, every triple, and so on up to all n) must satisfy the product rule.

- For independent events, the probability of the intersection of any subcollection of them equals the product of their probabilities.

- The Newton-Pepys problem, posed by Samuel Pepys to Isaac Newton in 1693, asks which of three outcomes with fair dice is most likely.

- The three options are: (A) at least one 6 when rolling 6 dice, (B) at least two 6s when rolling 12 dice, and (C) at least three 6s when rolling 18 dice.

- Each probability is computed through its complement, using the independence of the dice. For example, P(A) = 1 - (5/6)^6 ≈ 0.665 and P(B) = 1 - (5/6)^12 - 12(1/6)(5/6)^11 ≈ 0.619, while P(C) ≈ 0.597.

- Newton correctly found that A is the most likely, but his intuitive explanation was flawed; the episode shows that a correct calculation should be backed by sound intuition.
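The gap between pairwise independence and full independence is easiest to see in a small counterexample. The sketch below uses one standard construction, two tosses of a fair coin, which is not necessarily the example used in the lecture: A is "first toss heads", B is "second toss heads", and C is "the two tosses agree". All names are hypothetical.

```python
from fractions import Fraction
from itertools import product

# Sample space: two tosses of a fair coin; each of the 4 outcomes has probability 1/4.
omega = list(product("HT", repeat=2))

def prob(event) -> Fraction:
    """Probability of an event (a predicate on outcomes) under the uniform measure."""
    return Fraction(sum(1 for w in omega if event(w)), len(omega))

def first_heads(w):
    return w[0] == "H"

def second_heads(w):
    return w[1] == "H"

def tosses_agree(w):
    return w[0] == w[1]

def intersect(*events):
    """Event that all of the given events occur."""
    return lambda w: all(e(w) for e in events)

A, B, C = first_heads, second_heads, tosses_agree

# Each pair satisfies the product rule ...
pairwise = all(
    prob(intersect(e, f)) == prob(e) * prob(f)
    for e, f in [(A, B), (A, C), (B, C)]
)
# ... but the triple intersection does not: P(A ∩ B ∩ C) = 1/4, not 1/8.
triple = prob(intersect(A, B, C)) == prob(A) * prob(B) * prob(C)
print("pairwise independent:", pairwise)      # True
print("triple product rule holds:", triple)   # False
```

Knowing any one of the events tells you nothing about any other single event, but knowing both A and B determines C, which is exactly what the triple equation detects.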
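The complement calculation can be written once for all three options: P(at least k sixes in n dice) is 1 minus the binomial probabilities of 0, 1, ..., k-1 sixes, which relies on the dice being independent. As an assumption of this sketch, the probability of a six is a parameter p rather than being fixed at 1/6, so the same function can also be run on a loaded die, which is the situation behind Stigler's objection to Newton's reasoning. The value p = 1/2 below is a hypothetical loaded die chosen only for illustration.

```python
from fractions import Fraction
from math import comb

def p_at_least(k: int, n: int, p: Fraction) -> Fraction:
    """P(at least k sixes in n independent rolls), each roll a six with probability p."""
    # Complement rule: subtract P(exactly j sixes) for j = 0, ..., k-1 (binomial terms).
    return 1 - sum(comb(n, j) * p**j * (1 - p) ** (n - j) for j in range(k))

def newton_pepys(p: Fraction) -> tuple:
    """Return (P(A), P(B), P(C)): at least k sixes when rolling 6k dice, for k = 1, 2, 3."""
    return tuple(p_at_least(k, 6 * k, p) for k in (1, 2, 3))

for p_six in (Fraction(1, 6), Fraction(1, 2)):
    a, b, c = newton_pepys(p_six)
    print(f"P(six) = {p_six}: A = {float(a):.4f}, B = {float(b):.4f}, C = {float(c):.4f}")
```

For the fair die the output shows A > B > C, agreeing with Newton's answer. For the hypothetical die with P(six) = 1/2 the ordering reverses to A < B < C, so the ranking genuinely depends on the fairness assumption.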

29:06

Conditional Probability: Updating Beliefs with Evidence

- Stigler argued that Newton's intuitive argument was flawed because it never used the assumption that the dice are fair.

- If the dice were loaded so that the probability of a six changed, the ranking of the three outcomes could change.

- Because Newton's argument would reach the same conclusion no matter how the dice were weighted, it cannot be a valid justification for an answer that does depend on the weighting.

- The rest of the lecture focuses on conditional probability, the tool for updating beliefs in light of new evidence.

- Updating is sequential: each new piece of evidence leads to another update of the current probabilities, much as we keep learning and adapting throughout life.

- Conditioning, or updating beliefs based on new evidence, is fundamental in science, philosophy, and decision-making.

- The conditional probability of A given B is defined as P(A|B) = P(A ∩ B)/P(B), provided P(B) > 0.

- Two intuitive pictures of conditional probability are the pebble world and the frequentist world.

- In the pebble world, the sample space is a finite set of pebbles whose masses sum to 1. Conditioning on B discards the pebbles outside B and renormalizes the remaining masses, dividing each by P(B) so they again sum to 1.

- The frequentist world interprets probability as the long-run frequency of an event occurring in repeated experiments.
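The pebble-world picture translates directly into code: store each outcome with its mass, drop the outcomes outside the conditioning event, and divide the survivors by the event's probability. The sketch below is a hypothetical illustration with two fair dice; the evidence "the first die is a 6" and the event "the sum is at least 10" are assumptions chosen for the example. It checks that renormalizing gives the same answer as the definition P(A ∩ B)/P(B).

```python
from fractions import Fraction
from itertools import product

# Pebble world for two fair dice: 36 pebbles (outcomes), each with mass 1/36.
pebbles = {roll: Fraction(1, 36) for roll in product(range(1, 7), repeat=2)}

def prob(event, masses) -> Fraction:
    """Total mass of the pebbles that lie in the event."""
    return sum((m for w, m in masses.items() if event(w)), Fraction(0))

def condition(masses, event):
    """Condition on `event`: discard pebbles outside it, then divide the rest by P(event)."""
    p_event = prob(event, masses)
    if p_event == 0:
        raise ValueError("cannot condition on an event with probability 0")
    return {w: m / p_event for w, m in masses.items() if event(w)}

def first_is_six(w):       # hypothetical evidence B
    return w[0] == 6

def sum_at_least_10(w):    # hypothetical event A
    return w[0] + w[1] >= 10

def both(w):
    return first_is_six(w) and sum_at_least_10(w)

updated = condition(pebbles, first_is_six)           # 6 pebbles left, each with mass 1/6
via_pebbles = prob(sum_at_least_10, updated)
via_definition = prob(both, pebbles) / prob(first_is_six, pebbles)
print(via_pebbles, via_definition)   # 1/2 1/2
```

Exact fractions are used instead of floats so that the renormalized masses sum to exactly 1 and the two routes can be compared with `==`.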

44:06

Theorems on Probability and Conditional Events

- In the frequentist world, to find P(A|B), repeat the experiment many times, circle the repetitions in which B occurred, and compute the fraction of those circled repetitions in which A also occurred.

- Theorem 1: P(A ∩ B) = P(B)P(A|B) = P(A)P(B|A), which follows directly from the definition of conditional probability.

- Theorem 2 extends this to n events by conditioning on each event in turn: P(A_1 ∩ ... ∩ A_n) = P(A_1)P(A_2|A_1)P(A_3|A_1, A_2) ... P(A_n|A_1, ..., A_(n-1)).

- Theorem 3, Bayes' rule, relates P(A|B) to P(B|A): dividing both sides of P(B)P(A|B) = P(A)P(B|A) by P(B) gives P(A|B) = P(B|A)P(A)/P(B). The lecture stresses that, despite its one-line proof, this is among the most important results in statistics.
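The frequentist description of P(A|B) can be imitated with a simulation: run many repetitions, keep ("circle") only those where B occurred, and report the fraction of them in which A also occurred. In this sketch the experiment (two fair dice), the events, the seed, and the function name are all hypothetical choices; for these events the exact answer is 1/2, since given a first 6 the sum is at least 10 exactly when the second die shows 4, 5, or 6.

```python
import random

def estimate_conditional(n_trials: int, seed: int = 0) -> float:
    """Estimate P(sum >= 10 | first die is 6) as a long-run fraction of trials.

    Among the trials where B (first die is 6) occurred, return the fraction
    in which A (sum of both dice is at least 10) also occurred.
    """
    rng = random.Random(seed)
    b_count = 0
    a_and_b_count = 0
    for _ in range(n_trials):
        d1, d2 = rng.randint(1, 6), rng.randint(1, 6)
        if d1 == 6:                 # "circle" the trials where B occurred
            b_count += 1
            if d1 + d2 >= 10:       # among those, count the ones where A also occurred
                a_and_b_count += 1
    if b_count == 0:
        raise ValueError("B never occurred; increase n_trials")
    return a_and_b_count / b_count

print(estimate_conditional(100_000))
```

With 100,000 trials, B occurs in roughly one sixth of them, so the estimate typically lands within about 0.01 of 1/2; the fraction converges to P(A ∩ B)/P(B) as the number of trials grows.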
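All three theorems can be verified exactly on a small finite sample space. The sketch below enumerates two fair dice and checks Theorem 1, Theorem 2 for three events, and Bayes' rule with exact fractions. The events A, B, and C are hypothetical choices made for illustration; they were picked so that P(A|B) and P(B|A) differ, which shows that Bayes' rule is needed to convert one into the other.

```python
from fractions import Fraction
from itertools import product

omega = list(product(range(1, 7), repeat=2))   # two fair dice, 36 equally likely outcomes

def P(event) -> Fraction:
    """Probability of an event (a predicate on outcomes) under the uniform measure."""
    return Fraction(sum(1 for w in omega if event(w)), len(omega))

def both(e, f):
    """Event that e and f both occur."""
    return lambda w: e(w) and f(w)

def P_given(a, b) -> Fraction:
    """Conditional probability P(a | b) = P(a and b) / P(b); assumes P(b) > 0."""
    return P(both(a, b)) / P(b)

def A(w):   # hypothetical event: the sum is at least 10
    return w[0] + w[1] >= 10

def B(w):   # hypothetical event: the first die shows 5 or 6
    return w[0] >= 5

def C(w):   # hypothetical event: the second die is even
    return w[1] % 2 == 0

# Theorem 1: P(A and B) = P(B) P(A | B) = P(A) P(B | A)
assert P(both(A, B)) == P(B) * P_given(A, B) == P(A) * P_given(B, A)
# Theorem 2 (three events): P(A, B, C) = P(A) P(B | A) P(C | A, B)
assert P(both(both(A, B), C)) == P(A) * P_given(B, A) * P_given(C, both(A, B))
# Theorem 3 (Bayes' rule): P(A | B) = P(B | A) P(A) / P(B)
assert P_given(A, B) == P_given(B, A) * P(A) / P(B)
print(P_given(A, B), P_given(B, A))   # 5/12 5/6
```

Here P(A|B) = 5/12 while P(B|A) = 5/6: confusing the two would more than double the answer, which is the kind of mistake Bayes' rule guards against.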