Discrete Random Variable & Probability Mass Function | Statistics and Probability By GP Sir

Dr.Gajendra Purohit・23 minutes read

Dr. Gajendra Purohit's lecture covers discrete random variables, emphasizing the probability mass function (PMF) and cumulative distribution function (CDF) as essential tools for calculating probabilities in various contexts, including binomial and Poisson distributions. The session also works through practical examples and calculations involving these concepts, reinforcing their importance for success in higher mathematics and competitive exams.

Insights

- Dr. Gajendra Purohit explains the distinction between discrete and continuous random variables, emphasizing that discrete variables, like the number of heads from coin tosses, can take only specific values, while continuous variables can assume any value within a range. He highlights the importance of understanding the probability mass function (PMF) and cumulative distribution function (CDF) for calculating probabilities in various scenarios, including distributions such as the binomial and Poisson.

- The lecture provides practical examples to illustrate these concepts, such as calculating the PMF for drawing bad oranges from a box and determining probabilities from coin tosses. By mastering these foundational ideas, students are better equipped for success in higher mathematics and competitive exams, underscoring the relevance of engaging with the material actively.

Recent questions

What is a discrete random variable?

A discrete random variable is a type of variable that can take on a countable number of distinct values. For example, when tossing a coin, the number of heads that can result from a series of tosses is a discrete random variable. It can only take specific values, such as 0, 1, or 2 heads when tossing the coin twice. This characteristic distinguishes discrete random variables from continuous random variables, which can assume any value within a given range. Understanding discrete random variables is fundamental in probability theory, as they are often used in various statistical analyses and experiments.

How do you calculate a probability mass function?

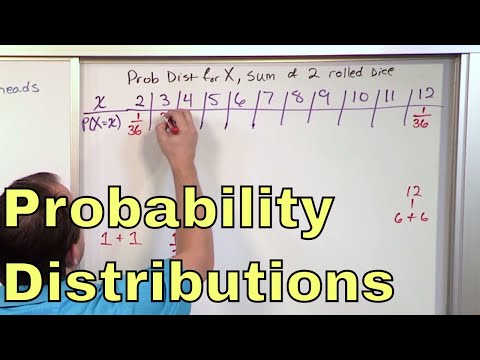

The probability mass function (PMF) is calculated by assigning a probability to each possible value of a discrete random variable. For the PMF to be valid, every assigned probability must be non-negative and the probabilities must sum to one. For instance, if the random variable is the number of heads in two coin tosses, you would list all equally likely outcomes (HH, HT, TH, TT) and group them by number of heads, giving probabilities 1/4, 1/2, and 1/4 for 0, 1, and 2 heads. The PMF then gives a compact representation of these probabilities, making the behavior of the random variable easier to analyze.
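
The two-toss example can be sketched in Python as follows. This is a minimal illustration rather than anything shown in the lecture: it assumes a fair coin, and the function name `heads_pmf` is hypothetical. It enumerates every outcome, counts heads, and checks the two validity conditions (non-negative, summing to one).

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def heads_pmf(n_tosses):
    """PMF of the number of heads in n fair coin tosses, by enumeration.

    Assumes a fair coin, so every sequence of H/T is equally likely.
    """
    outcomes = list(product("HT", repeat=n_tosses))  # e.g. ('H', 'T')
    counts = Counter(outcome.count("H") for outcome in outcomes)
    total = len(outcomes)
    return {heads: Fraction(count, total) for heads, count in sorted(counts.items())}


pmf = heads_pmf(2)

# Validity checks for a PMF: non-negative values that sum to one.
assert all(p >= 0 for p in pmf.values())
assert sum(pmf.values()) == 1

for heads, p in pmf.items():
    print(f"P(X = {heads}) = {p}")
```

Exact fractions are used instead of floats so the sum-to-one check is not affected by rounding. Calling `heads_pmf(4)` enumerates the 2^4 = 16 outcomes of four tosses in the same way.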

What is a cumulative distribution function?

The cumulative distribution function (CDF) is a function that describes the probability that a random variable takes on a value less than or equal to a specific point. It is calculated by summing the probabilities of all outcomes up to that point. For example, if you have a discrete random variable with a PMF, the CDF at a certain value x would be the sum of the probabilities for all values less than or equal to x. This function is particularly useful for understanding the distribution of probabilities across a range of values and is essential for various statistical applications, including hypothesis testing and confidence interval estimation.
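
As a rough sketch of the summation described above, the following Python builds a CDF from a PMF stored as a dictionary. The PMF used is the two-coin-toss one (fair coin assumed), and the helper names `cdf_from_pmf` and `cdf_at` are hypothetical.

```python
from fractions import Fraction
from itertools import accumulate


def cdf_from_pmf(pmf):
    """Return {x: F(x)} at each support point, where F(x) = P(X <= x)."""
    values = sorted(pmf)
    running_totals = accumulate(pmf[v] for v in values)
    return dict(zip(values, running_totals))


def cdf_at(pmf, x):
    """Evaluate F(x) = P(X <= x) at any real x, not only at support points."""
    return sum((p for v, p in pmf.items() if v <= x), Fraction(0))


# PMF of the number of heads in two fair coin tosses (assumed example).
pmf = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}

cdf = cdf_from_pmf(pmf)
for x, value in cdf.items():
    print(f"F({x}) = {value}")
```

For a discrete variable the CDF is a step function, which is why `cdf_at` returns the same value for every x between two consecutive support points.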

What are examples of continuous random variables?

Continuous random variables are variables that can take any value within a specified range. Common examples include measurements such as height, weight, temperature, and time. Unlike discrete random variables, which have distinct and separate values, continuous random variables can assume an infinite number of values within an interval. For instance, the temperature in a room can be any value within a range, such as 20.5 degrees Celsius or 20.55 degrees Celsius. Understanding continuous random variables is crucial for advanced probability studies, as they are often modeled using various distributions, including normal, exponential, and uniform distributions.

Why is understanding PMF and CDF important?

Understanding the probability mass function (PMF) and cumulative distribution function (CDF) is vital for accurately calculating and interpreting probabilities in various statistical scenarios. The PMF provides a way to assign probabilities to discrete outcomes, while the CDF allows for the assessment of probabilities over a range of values. Mastery of these concepts is essential for success in higher mathematics and competitive exams, as they form the foundation for more complex topics such as binomial and Poisson distributions. Additionally, a solid grasp of PMF and CDF enables students to engage with real-world data and make informed decisions based on statistical analysis.

Summary

00:00

Understanding Discrete Random Variables and Distributions

- Dr. Gajendra Purohit introduces the concepts of discrete random variables, the probability mass function (PMF), and the cumulative distribution function (CDF) in a mathematics-focused YouTube lecture for B.Sc. students and exam preparation.

- Discrete random variables can take specific values, such as the number of heads when tossing a coin, while continuous random variables can take any value within a range, like temperature readings.

- An example of a discrete random variable is tossing a coin twice, resulting in a sample space of four outcomes: HH, HT, TH, TT, with probabilities of 1/4, 2/4, and 1/4 for 0, 1, and 2 heads, respectively.

- The probability mass function (PMF) is defined as the function that assigns a probability to each value of a discrete random variable, with all probabilities non-negative and summing to one.

- A practical example involves drawing two oranges from a box containing 4 bad and 16 good oranges, calculating the PMF for the number of bad oranges drawn using combinations.

- The cumulative distribution function (CDF) is introduced as the probability that a random variable is less than or equal to a certain value, written F(x) = P(X ≤ x).

- For a random variable with a PMF, the CDF can be calculated by summing the probabilities for all values up to a specified point, illustrating with examples of probabilities between defined intervals.

- The lecture emphasizes the importance of understanding both PMF and CDF for calculating probabilities in various scenarios, including complex distributions like binomial and Poisson distributions.

- Continuous random variables are discussed, highlighting distributions such as uniform, normal, exponential, gamma, and beta distributions, which are essential for advanced probability studies.

- The session concludes with a reminder of the significance of mastering these concepts for success in higher mathematics and competitive exams, encouraging students to engage with the material actively.
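
The orange example from this part of the summary can be reproduced with a short Python sketch. It assumes the two oranges are drawn together without replacement, which is what counting with combinations implies; the function name `bad_orange_pmf` and its parameters are hypothetical, and the lecture's own working may be laid out differently.

```python
from fractions import Fraction
from math import comb


def bad_orange_pmf(bad=4, good=16, drawn=2):
    """PMF of the number of bad oranges in a draw without replacement.

    P(X = k) = C(bad, k) * C(good, drawn - k) / C(bad + good, drawn)
    """
    total_ways = comb(bad + good, drawn)
    return {
        k: Fraction(comb(bad, k) * comb(good, drawn - k), total_ways)
        for k in range(min(bad, drawn) + 1)
    }


pmf = bad_orange_pmf()

# A valid PMF must sum to one.
assert sum(pmf.values()) == 1

for k, p in pmf.items():
    print(f"P({k} bad) = {p}")
```

With 4 bad and 16 good oranges there are C(20, 2) = 190 equally likely pairs, so the three probabilities are 120/190, 64/190, and 6/190 before reduction.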

13:04

Probability Calculations and Distributions Explained

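- The calculation begins by summing the probabilities, each written in terms of k, and setting the total to 1, which gives 10k^2 + 9k - 1 = 0. Splitting the middle term, 10k^2 + 10k - k - 1 = 0, factors this as (10k - 1)(k + 1) = 0, so k = 1/10 or k = -1; only k = 1/10 is admissible because probabilities cannot be negative.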

- The probability distribution is defined for x from 0 to 7. With k = 1/10, k^2 = 0.01 and 7k^2 = 0.07, so the term 7k^2 + k evaluates to 0.17.

- To find P(x < 6), the complement is used: 1 - (P6 + P7), where P6 is 0.02 and P7 is 0.17, giving 0.81.

- For P(x ≥ 6), P6 = 0.02 and P7 = 0.17 are summed, giving 0.19.

- The number of heads in four coin tosses is treated next: the sample space has 2^4 = 16 outcomes, from which the probabilities of 0 to 4 heads are obtained.

- In a further example the probability mass function is written in terms of a constant k; normalization gives k = 1/49, and the probabilities P0, P1, P2, and P3 are summed to find P(x < 4) = 15/49.

- For P5 and P6, the sum of probabilities is 24k, leading to a final probability of 24/49.

- The final calculation for P values greater than 3 and less than 6 results in 33/49, with additional resources provided for further study and exam preparation.
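
The first calculation in this section can be checked with a small Python sketch. It assumes the normalization condition is the quadratic 10k^2 + 9k - 1 = 0, which is the form consistent with the stated roots 1/10 and -1; the values P6 = 0.02 and P7 = 0.17 are taken from the summary, and the helper name `rational_roots` is hypothetical.

```python
from fractions import Fraction
from math import isqrt


def rational_roots(a, b, c):
    """Roots of a*k**2 + b*k + c = 0 when the discriminant is a perfect square."""
    disc = b * b - 4 * a * c
    root = isqrt(disc)  # raises ValueError if disc is negative
    if root * root != disc:
        raise ValueError("discriminant is not a perfect square")
    return Fraction(-b + root, 2 * a), Fraction(-b - root, 2 * a)


# Assumed normalization condition: 10k^2 + 9k - 1 = 0.
roots = rational_roots(10, 9, -1)

# A probability cannot be negative, so the negative root is rejected.
k = next(r for r in roots if r >= 0)

# Values quoted in the summary for x = 6 and x = 7.
p6 = Fraction(2, 100)
p7 = Fraction(17, 100)

p_at_least_6 = p6 + p7         # P(X >= 6)
p_below_6 = 1 - p_at_least_6   # complement rule: P(X < 6)

print("roots:", roots)
print("k =", k)
print("P(X >= 6) =", float(p_at_least_6))
print("P(X < 6) =", float(p_below_6))
```

The complement rule avoids summing six separate probabilities when the two upper-tail values are already known.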