A conversation between Nassim Nicholas Taleb and Stephen Wolfram at the Wolfram Summer School 2021

Wolfram・176 minutes read

The project discussed aims to apply physics formalism to economics, introducing stochasticity in economic models to address limitations and proposing practical applications using Mathematica. The importance of risk management in navigating uncertainty, the flaws in relying solely on statistics in fields like medicine and finance, and the impact of individual differences and fat-tailed distributions on predictions are emphasized.

Insights

- Physics principles are being applied to economics, with stochasticity introduced to enhance understanding and address limitations of traditional models.

- The Law of Large Numbers and the Central Limit Theorem are central to economics and statistics: the former concentrates the sample average as the sample grows, and the latter justifies using a Gaussian distribution for large sums.

- Market inefficiencies exist in finance, leading to arbitrage opportunities that can range from seconds to longer durations, with some discrepancies due to ownership structures.

- Traditional economic theories like Ricardo's comparative advantage are critiqued for not considering stochasticity, which should be incorporated into economic analysis.

- The necessity of a single currency for efficient economies is emphasized, ensuring price consistency and preventing arbitrage and volatility between currencies.

- Risk management supersedes complete scientific knowledge in importance, prioritizing survival and precautionary measures to protect essential layers of existence, including humanity and the planet.

Recent questions

What is the central limit theorem?

The central limit theorem states that the suitably normalized sum of many independent random variables with finite variance tends towards a Gaussian distribution, which allows a Gaussian to be used for large sums without knowing the details of the individual variables.
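
The convergence described above can be checked numerically. The following is a minimal sketch in Python (the talk itself uses Mathematica); the choice of uniform variables, 12 terms per sum, and the helper name `standardized_uniform_sum` are assumptions made for illustration only.

```python
import random
import statistics

def standardized_uniform_sum(n_terms, rng):
    """Sum n_terms Uniform(0, 1) draws, then rescale to mean 0 and variance 1."""
    total = sum(rng.random() for _ in range(n_terms))
    mean = n_terms * 0.5            # each Uniform(0, 1) draw has mean 1/2
    std = (n_terms / 12.0) ** 0.5   # and variance 1/12
    return (total - mean) / std

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [standardized_uniform_sum(12, rng) for _ in range(20_000)]

# Share of standardized sums within one standard deviation of zero,
# compared with the value a standard Gaussian would give.
within_one_sd = sum(abs(x) <= 1.0 for x in samples) / len(samples)
normal = statistics.NormalDist()
gaussian_reference = normal.cdf(1.0) - normal.cdf(-1.0)

print(f"share within one standard deviation: {within_one_sd:.3f}")
print(f"Gaussian reference value:            {gaussian_reference:.3f}")
```

The two printed shares should be close even though a single uniform draw looks nothing like a bell curve. This only illustrates the thin-tailed case; it says nothing about how slowly fat-tailed variables converge.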

Related videos

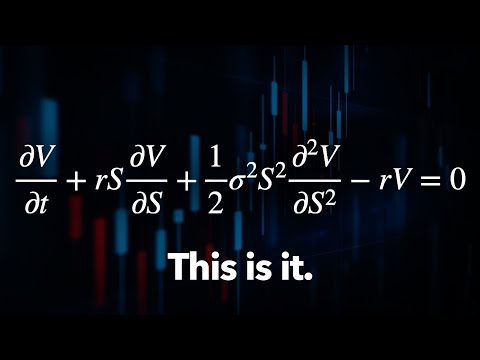

Veritasium

The Trillion Dollar Equation

Oxford Mathematics

Four Ways of Thinking: Statistical, Interactive, Chaotic and Complex - David Sumpter

MIT OpenCourseWare

16. Portfolio Management

Dear Sir

Maths in Real Life | Trigonometry/Algebra/Statistics/Mensuration/Calculas/Probability | Dear Sir

Khan Academy

Economic models | Basic economics concepts | AP Macroeconomics and Microeconomics | Khan Academy

Summary

00:00

"Physics principles applied to economics with stochasticity"

- The conversation begins with the speaker expressing gratitude for the participants and the purpose of discussing a physics project that has made significant progress.

- The project aims to apply the developed physics formalism to other fields like economics, seeking validation for this idea.

- The speaker questions the applicability of physics principles to economics, highlighting the complexity of economic theories and formulas.

- A layer of stochasticity is introduced in economics to enhance understanding, demonstrated through a heuristic involving pricing options at varying volatilities.

- The speaker shares a simple trick using Mathematica to price options at different volatilities, showcasing the impact on option prices.

- By manipulating the parameters in Mathematica, the speaker illustrates how averaging Gaussian distributions with different volatilities produces fat tails, and how varying volatility affects option prices.

- The introduction of a second layer of stochasticity in economics is proposed to address the limitations of traditional economic models.

- An example involving a grandmother's temperature preferences is used to explain the concept of stochasticity in decision-making.

- The speaker critiques traditional economic theories like Ricardo's comparative advantage, suggesting that stochasticity should be considered in economic analysis.

- The central limit theorem is explained using Mathematica, demonstrating how the sum of random variables tends towards a Gaussian distribution, challenging the belief that all distributions converge to Gaussian.
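
The option-pricing heuristic in this segment can be sketched as follows. This is not the speaker's Mathematica code: it is a Python illustration that assumes the standard Black-Scholes call formula with a zero interest rate, and the spot, strikes, volatility, and spread values are hypothetical. It compares a call priced at one volatility with the average of two prices at a lower and a higher volatility.

```python
import math

def norm_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def bs_call(spot, strike, vol, t, rate=0.0):
    """Black-Scholes price of a European call (assumed pricing model)."""
    d1 = (math.log(spot / strike) + (rate + 0.5 * vol ** 2) * t) / (vol * math.sqrt(t))
    d2 = d1 - vol * math.sqrt(t)
    return spot * norm_cdf(d1) - strike * math.exp(-rate * t) * norm_cdf(d2)

def mixed_vol_call(spot, strike, vol, spread, t):
    """Average of two call prices at vol*(1 - spread) and vol*(1 + spread)."""
    low = bs_call(spot, strike, vol * (1.0 - spread), t)
    high = bs_call(spot, strike, vol * (1.0 + spread), t)
    return 0.5 * (low + high)

# Hypothetical inputs: spot 100, one year to expiry, 20% volatility, +/-50% spread.
spot, t, vol, spread = 100.0, 1.0, 0.20, 0.5
results = {}
for strike in (100.0, 150.0):
    flat = bs_call(spot, strike, vol, t)
    mixed = mixed_vol_call(spot, strike, vol, spread, t)
    results[strike] = (flat, mixed)
    print(f"strike {strike:6.1f}: single vol {flat:8.4f}, averaged vols {mixed:8.4f}")
```

Under these assumptions the at-the-money price barely moves, while the far out-of-the-money call becomes several times more expensive once volatility is allowed to vary. That asymmetry is one way to see how uncertainty about volatility fattens the tails.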

18:38

Theorems in economics and statistics explained

- Central Limit Theorem and Law of Large Numbers are two theorems in economics and statistics that work together.

- Central Limit Theorem allows the use of a Gaussian distribution for large sums without delving into specifics.

- Law of Large Numbers states that as the number of observations grows, the sample average concentrates around the true mean.

- The distribution of the average can be illustrated using a random variate drawn from, for example, a uniform distribution.

- The Law of Large Numbers compresses the distribution of the mean as the sample size grows.

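- In Extremistan, the conditions for these theorems may hold only asymptotically, so convergence is too slow to rely on in practice.

- The Pareto distribution, which underlies the 80-20 rule, keeps most values small while a few tail observations account for most of the total.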

- The cumulative distribution function of Pareto distribution demonstrates wealth concentration.

- The heavy tail of distributions like the Pareto can make sample means fluctuate widely.

- To match the stability that a Gaussian distribution reaches with 30 observations, such a Pareto distribution needs around 10^13 samples.
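
The contrast between thin and fat tails can be made concrete with a small simulation. This is a hedged Python sketch, not the demonstration from the talk: the tail exponent 1.16 (a common parameterisation of the 80-20 rule), the sample size of 30, the number of trials, and the helper name `relative_spread_of_means` are all assumptions. It measures how much the sample mean wanders from trial to trial, relative to its own average.

```python
import random
import statistics

def relative_spread_of_means(draw, sample_size, trials, rng):
    """Mean absolute deviation of the sample means, divided by their average."""
    means = []
    for _ in range(trials):
        sample = [draw(rng) for _ in range(sample_size)]
        means.append(statistics.fmean(sample))
    center = statistics.fmean(means)
    deviations = [abs(m - center) for m in means]
    return statistics.fmean(deviations) / center

rng = random.Random(42)  # fixed seed so the run is repeatable

# Thin-tailed case: Gaussian with mean 1 and standard deviation 1.
gauss_spread = relative_spread_of_means(
    lambda r: r.gauss(1.0, 1.0), sample_size=30, trials=2_000, rng=rng)

# Fat-tailed case: Pareto with tail exponent 1.16 (assumed 80-20 parameterisation).
pareto_spread = relative_spread_of_means(
    lambda r: r.paretovariate(1.16), sample_size=30, trials=2_000, rng=rng)

print(f"Gaussian relative spread of the mean: {gauss_spread:.3f}")
print(f"Pareto relative spread of the mean:   {pareto_spread:.3f}")
```

With the same 30 observations per sample, the Pareto mean is far less stable than the Gaussian one. The sketch shows only the direction of the effect; it does not attempt to reproduce the sample-size figure quoted in the summary.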

36:47

Market inefficiencies and arbitrage in finance

- In finance, making money involves converting assets in a loop, but inefficiencies can lead to opportunities for profit.

- Instances like Volkswagen owning Porsche have shown market value discrepancies due to ownership structures, creating inefficiencies.

- Market inefficiencies can exist temporarily, lasting from minutes to years, but generally, markets tend to correct themselves.

- Various arbitrage strategies exist, from currency options to statistical relationships, with some lasting seconds and others longer.

- Foundational economics theories, like those based on axioms or linear factors, may not always hold in real-world financial systems.

- Economic models often assume linearity, like in factor analysis, to simplify pricing and relationships between variables.

- The challenge in economics lies in dealing with high dimensionality and spurious correlations due to limited data per security.

- The law of large numbers and central limit theorem play crucial roles in aggregating data and understanding financial ensembles.

- The presence of tails in economic variables can lead to unpredictable outcomes, challenging traditional economic models.

- The necessity of a unit of account or home currency is essential for enforcing the law of one price and facilitating arbitrage in financial transactions.
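
The idea of converting assets in a loop, and the law of one price that rules out a profit from doing so, can be sketched as a simple consistency check. The currency codes and exchange rates below are hypothetical, and `loop_gain` is an illustrative helper rather than anything shown in the conversation; transaction costs are ignored.

```python
def loop_gain(rates, cycle):
    """Multiply exchange rates around a closed cycle of currencies.

    rates maps (source, target) to units of target received per unit of source.
    A result of 1.0 means the loop returns exactly what was put in.
    """
    gain = 1.0
    for source, target in zip(cycle, cycle[1:] + cycle[:1]):
        gain *= rates[(source, target)]
    return gain

cycle = ["USD", "EUR", "GBP"]

# Hypothetical rates that are mutually consistent (law of one price holds).
consistent = {("USD", "EUR"): 0.90, ("EUR", "GBP"): 0.80, ("GBP", "USD"): 1.0 / 0.72}

# Same rates, except the last quote is slightly too high (an inefficiency).
mispriced = dict(consistent)
mispriced[("GBP", "USD")] = 1.40

consistent_gain = loop_gain(consistent, cycle)
mispriced_gain = loop_gain(mispriced, cycle)

print(f"consistent quotes, gain around the loop: {consistent_gain:.4f}")
print(f"mispriced quotes, gain around the loop:  {mispriced_gain:.4f}")
```

A gain above 1.0 before costs signals an arbitrage opportunity. Measuring every price in one unit of account is what makes such inconsistencies visible in the first place.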

53:36

"Exploring Economics, Physics, and Cryptocurrencies"

- The process of buying a book involves a chain of bots leading to providing food for a cat.

- Coherence in systems is compared to physics, where observers traveling at different speeds maintain system coherence.

- Time dilation in physics is explained as computational, affecting the ability to evolve in time based on computation used for spatial movement.

- Entropy in economics is discussed, including a book on entropy and economics of which only 21 pages were read in 10 years.

- Spatialization in economics is linked to connectivity, affecting dynamics and the fat-tailed nature of transactions.

- Arbitrage is viewed as the fundamental concept in the world, with differences in prices leading to arbitrage opportunities.

- Cryptocurrencies are discussed, with Bitcoin's value questioned based on its lack of utility for transactions and volatility.

- The value of stocks is explained through expected cash flows, contrasting with cryptocurrencies like Bitcoin.

- The utility of cryptocurrencies lies in computational contracts and autonomous execution in a purely computational domain.

- The valuation of cryptocurrencies is tied to their utility in enabling computational contracts and unique network capabilities.

01:11:00

Bitcoin's Volatility and US Dollar Stability

- Renting an apartment in Bitcoin requires income to be in Bitcoin, necessitating employers to have matching assets.

- Bitcoin's widespread adoption is hindered by government control over GDP and contracts.

- Volatility between currencies in commerce is typically low, around 5-7%, with some currencies like the Hong Kong dollar being close to zero.

- Bitcoin must have minimal volatility, close to zero, to be widely adopted, especially concerning the US dollar.

- Inflation is compensated by interest rates, historically aligning with the dollar, but not necessarily tracking the Consumer Price Index (CPI).

- Inflation-indexed bonds track market expectations of CPI, not the actual CPI, leading to potential losses.

- Constructing portfolios with short bonds can help minimize inflation's impact on assets.

- The US dollar's inflation rate, around 3%, contrasts with Bitcoin's volatility, making the dollar more stable.

- A single currency is essential for economies to function efficiently, preventing arbitrage and volatility between currencies.

- The idea of a single numéraire in economics is akin to coordinate charts in physics, ensuring price consistency across different currencies.

01:26:44

"Risk Management Over Science: Survival Imperative"

- Computational contracts and the concept of store of value are discussed, with doubts raised about the validity of claims regarding the necessity of store of value.

- The importance of looking for a "sucker problem" in financial narratives is highlighted, emphasizing the need to scrutinize coherent stories for potential flaws.

- The comparison between zero-sum assets like Bitcoin and value-generating assets like Amazon is made, questioning the fundamental story behind investing in Bitcoin.

- The suggestion is made to consider other cryptocurrencies like Ethereum, which may offer more value due to technological advancements over Bitcoin.

- The discussion shifts to the concept of computational irreducibility and its impact on risk management, highlighting the limitations of predicting outcomes even with complete scientific models.

- The distinction between science as a methodology and risk management as a survival strategy is emphasized, with the latter prioritizing survival over complete knowledge.

- The importance of precautionary measures to protect essential layers of existence, such as humanity and the planet, is stressed in risk management.

- The idea that risk management supersedes science in importance due to the instinct for survival and the need to avoid catastrophic outcomes is reiterated.

- The concept of absorbing barriers, including personal survival, species survival, and the preservation of the planet, is crucial in risk management decisions.

- The conclusion is drawn that while science may be incomplete, the imperative to survive and make decisions under uncertainty remains paramount, with risk management taking precedence over complete knowledge.

01:42:29

"Medicine's Statistical Approach and Individual Differences"

- The mechanism for risk management differs from scientific mechanisms.

- Science is often overused and abused, leading to misconceptions.

- Empirical science, especially in fields like medicine, relies heavily on statistical data.

- The law of large numbers and the central limit theorem are fundamental in statistical methodologies.

- Medicine's reliance on statistical averages can lead to misunderstandings and misinterpretations.

- Individual differences in medicine can significantly impact treatment outcomes.

- Medicine's aversion to theories hinders progress in understanding and treating diseases.

- Evidence-based medicine often fails to account for individual variations and specific circumstances.

- The history of medicine, rooted in a divide between surgeons and theologians, influences current practices.

- The statistical approach in medicine can be flawed without a deep understanding of the underlying data and methodologies.

01:58:12

Statistics: Stochastic Nature and Misuse in Research

- The p-value is itself a random variable: an experiment whose true p-value is 0.12 yields observed p-values distributed around 0.12.

- Repeating an experiment multiple times can yield different p-values, showing its stochastic nature.

- A distinction is made between probability-driven statisticians and cookbook statisticians in various fields like finance and medicine.

- Misuse of statistical concepts without understanding their derivation is a prevalent issue.

- Medicine often relies on statistical certainty from clinical trials, seeking scientific authority.

- The reliance on statistics in fields like psychology, medicine, economics, and finance is criticized.

- The importance of proper control groups in studies, like the hydroxychloroquine case, is highlighted.

- Clinical trials face challenges in aggregating diverse populations and determining trial eligibility.

- The flaws in using statistics to generalize results from clinical trials are discussed.

- The limitations of statistics in predicting human behavior are acknowledged, with a call for a deeper understanding of individual differences.
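
The stochastic nature of the p-value can be illustrated by repeating the same experiment many times. The sketch below is an assumption-laden Python illustration, not the analysis from the talk: it uses a one-sided z-test with known unit variance, 30 observations per experiment, and a true effect chosen so that the p-value at the expected test statistic is 0.12.

```python
import random
import statistics

STANDARD_NORMAL = statistics.NormalDist()

def one_sided_p_value(sample):
    """p-value of a one-sided z-test of 'mean <= 0', assuming unit variance."""
    z = statistics.fmean(sample) * len(sample) ** 0.5
    return 1.0 - STANDARD_NORMAL.cdf(z)

n = 30            # observations per experiment (assumed)
target_p = 0.12   # p-value at the expected test statistic
# True mean chosen so that the expected z-score maps to target_p.
true_mean = STANDARD_NORMAL.inv_cdf(1.0 - target_p) / n ** 0.5

rng = random.Random(1)  # fixed seed so the run is repeatable
p_values = []
for _ in range(5_000):
    sample = [rng.gauss(true_mean, 1.0) for _ in range(n)]
    p_values.append(one_sided_p_value(sample))

median_p = statistics.median(p_values)
share_significant = sum(p < 0.05 for p in p_values) / len(p_values)

print(f"median observed p-value:        {median_p:.3f}")
print(f"share of runs with p below 0.05: {share_significant:.3f}")
```

Even though nothing about the underlying effect changes between runs, a sizeable share of the repetitions lands below the conventional 0.05 threshold. A single reported p-value therefore says less than it appears to.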

02:14:19

"Science vs. Medicine: Theoretical vs. Empirical"

- Newtonian theory allows for a general model to understand how apples fall without cataloging each instance.

- Physics and mathematics build towers of theories based on underlying principles, unlike medicine.

- Clinical experience in medicine, not empirical evidence, often guides successful treatment decisions.

- Medicine lacks a theoretical approach, relying on empirical evidence that may not always be effective.

- Ancient texts on medicine focused on theoretical statements rather than clinical experience.

- Engineering in ancient times had effective procedures and recipes, unlike medicine.

- Engineering manuals were not preserved as well as theoretical works, similar to software engineering today.

- Science sometimes precedes technology, as seen in the development of rockets and jet engines.

- The variance in pandemic predictions highlights the need to consider fat-tailed distributions and avoid single point estimates.

- Scientific results show that pandemics have fat-tailed distributions, challenging traditional prediction methods.

02:30:06

"Predictions, Models, and Statistics in Science"

- In dynamic processes, predictions can be self-cancelling or self-fulfilling based on feedback from people.

- Models created by Diego and his son using cellular automata were more robust and revealing than academic models.

- The discussion revolves around computational irreducibility and the uncertainty in modeling the pandemic.

- Medicine is non-repeatable due to individual differences, unlike physics where every electron is the same.

- Statistics can provide a false sense of scientific validity, leading to misguided conclusions.

- Probability distributions should be used correctly, considering the entire distribution and not just specific metrics.

- Doctors' probability estimates can be influenced by their experience, with young doctors overestimating rare diseases and older doctors overestimating common diseases.

- The misuse of statistics, especially in psychology, can lead to errors in interpreting data.

- Non-ergodicity theories in economics challenge the reliance on averages and highlight the importance of distinguishing time averages from ensemble averages.

- The discussion transitions to the importance of working with averages in science and the potential errors in mistaking different types of averages.

02:46:19

Casino Betting, Inequality, and Social Mobility

- The average expected return from the casino can be estimated from a fixed amount of betting, such as eight hours of play.

- Casinos are built on probability, making them predictable, unlike the rest of the world.

- Sending a single trader to the casino for 100 days gives a different result from sending 100 traders for one day each, even with a positive expected return, because a single bankruptcy ends the sequence.

- Bankruptcies affect the arithmetic average across many traders differently from the outcome of one trader's sequence of bets, so a repeated bettor needs a strategy that compounds returns.

- Inequality should be measured dynamically over time, considering how many Americans spend time in the top one percent or ten percent.

- Mental accounting in casinos involves treating money won differently to survive and make strategic bets.

- Time averages versus ensemble averages are crucial in physics but often overlooked in economics, leading to errors in understanding risk management and inequality.

- The Gini index can increase over time due to super-additivity, impacting how inequality is measured in different populations.

- A Markov chain approach is essential to understanding social mobility and quantifying how people move between different economic classes over time.

- Crashes in markets can lead to reallocation of wealth and opportunities, affecting social mobility and the distribution of resources in society.
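
The gap between ensemble averages and time averages can be shown with a small multiplicative bet. This example is an assumption, not one taken from the talk: a fair coin multiplies wealth by 1.5 on heads and by 0.6 on tails, and the helper name `final_wealth` is illustrative.

```python
import math
import random

UP, DOWN = 1.5, 0.6  # hypothetical wealth multipliers for heads and tails

# Ensemble view: average multiplier across many players in a single round.
ensemble_growth = 0.5 * UP + 0.5 * DOWN

# Time view: per-round growth factor experienced by one player over many rounds.
time_growth = math.exp(0.5 * math.log(UP) + 0.5 * math.log(DOWN))

def final_wealth(rounds, rng, start=1.0):
    """Wealth of a single player who reinvests everything each round."""
    wealth = start
    for _ in range(rounds):
        wealth *= UP if rng.random() < 0.5 else DOWN
    return wealth

rng = random.Random(7)  # fixed seed so the run is repeatable
one_player_long_run = final_wealth(1_000, rng)

print(f"ensemble average growth per round: {ensemble_growth:.4f}")
print(f"time average growth per round:     {time_growth:.4f}")
print(f"one player's wealth after 1000 rounds: {one_player_long_run:.3e}")
```

The bet looks favourable when averaged across players, yet a single player who keeps playing loses almost everything. Mistaking one average for the other is the error this segment describes.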

03:02:55

"Risk Management: Avoiding Ruin in Finance"

- Russian roulette is not harmless: a bet with an 80% chance of making money and a 20% chance of dying cannot be judged by its expected payoff alone.

- Repeatedly playing Russian roulette leads to inevitable ruin due to the high risk involved.

- Risk management is crucial in situations of uncertainty to avoid ruin.

- Ergodicity, a concept often used in physics, is not always applicable, especially in cases involving absorbing barriers like bankruptcy.

- Long-Term Capital Management, a fund with Nobel Prize-winning members, failed due to ignoring ruin and taking excessive risks.

- Traders who overlook ruin or underestimate its impact often face significant losses, as seen in the case of Long-Term Capital Management.

- Financial institutions that lack skin in the game, unlike hedge funds, are more prone to failure due to their risk-taking strategies.

- The Volcker Rule, which restricts banks from proprietary trading, has led to a transfer of risk to hedge funds, enhancing their resilience.

- Survival mechanisms, like those seen in capitalism, are more effective than peer review systems in ensuring quality and success.

- The Law of One Price in economics is not a strict law but a guideline that highlights the importance of price consistency across markets, contingent on factors like transaction costs and barriers to trade.
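
The point about repeated exposure to ruin comes down to simple arithmetic: the chance of surviving every round shrinks geometrically. The sketch below assumes independent rounds; the per-round ruin probabilities (one in six for a revolver, and the 20% figure mentioned above) and the function name `survival_probability` are illustrative.

```python
def survival_probability(rounds, ruin_probability=1.0 / 6.0):
    """Probability of surviving all rounds when each carries an independent ruin risk."""
    return (1.0 - ruin_probability) ** rounds

for rounds in (1, 6, 20, 100):
    one_in_six = survival_probability(rounds)
    one_in_five = survival_probability(rounds, ruin_probability=0.20)
    print(f"{rounds:3d} rounds: survival {one_in_six:.6f} (1/6 risk), "
          f"{one_in_five:.6f} (20% risk)")
```

However attractive the payoff in a single round, survival over many rounds tends to zero. This is why an absorbing barrier such as bankruptcy or death cannot be handled by looking at expected returns alone.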

03:18:36

Key Decisions Prevent Bankruptcy, Ensure Profit

- Making good decisions is crucial to avoid bankruptcy even on seemingly excellent trades.

- Converting puts to calls can lead to financial losses if not managed correctly.

- Tight liquidity can impact arbitrage opportunities, especially in currency trading.

- Passive arbitrage involves buying cheaper goods from one location and selling them at a higher price elsewhere.

- Active arbitrage involves modifying products to increase their perceived value.

- Valuation and price are distinct concepts, with valuation often differing from market price.

- Uncertainty in climate models should lead to cautious decision-making regarding pollution.

- Automated trading models can be successful in short-term trading but may struggle with long-term predictions.